From Federated Learning to Decentralized Agent Networks: ChainOpera Project Analysis

This report explores ChainOpera AI, an ecosystem aimed at building a decentralized AI Agent network. The project originated from the open-source foundation of federated learning (FedML), was upgraded to a full-stack AI infrastructure through TensorOpera, and eventually evolved into ChainOpera, a Web3-based Agent network.

In our June research report "The Holy Grail of Crypto AI: Cutting-Edge Exploration of Decentralized Training", we discussed Federated Learning, a "controlled decentralization" approach that sits between distributed training and fully decentralized training: its core is keeping data local while aggregating parameters centrally, which meets privacy and compliance needs in fields such as healthcare and finance. Meanwhile, our previous research has consistently tracked the rise of Agent Networks, whose value lies in accomplishing complex tasks through the autonomy and division of labor of multiple agents, driving the evolution from "large models" to a "multi-agent ecosystem".

Federated Learning, with its principle of "data stays local, incentives based on contribution", lays the foundation for multi-party collaboration. Its distributed nature, transparent incentives, privacy protection, and compliance practices provide reusable experience for Agent Networks. The FedML team has followed this path, upgrading its open-source DNA into TensorOpera (an AI industry infrastructure layer) and then evolving further into ChainOpera (a decentralized Agent Network). Of course, Agent Networks are not necessarily a direct extension of Federated Learning. Their core lies in autonomous collaboration and task division among multiple agents, and they can also be built directly on multi-agent systems (MAS), reinforcement learning (RL), or blockchain incentive mechanisms.

I. Federated Learning and AI Agent Technology Stack Architecture

Federated Learning (FL) is a framework for collaborative training without centralized data aggregation. Its basic principle is that each participant trains the model locally and uploads only parameters or gradients to a coordinator for aggregation. This achieves privacy compliance by ensuring that "data never leaves its domain". After deployment in typical scenarios such as healthcare, finance, and mobile devices, Federated Learning has reached a relatively mature commercial stage. It still faces bottlenecks, however, such as high communication overhead, incomplete privacy protection, and slow convergence caused by device heterogeneity.

Among training modes, distributed training emphasizes centralized compute for efficiency and scale, while decentralized training achieves fully distributed collaboration through open compute networks. Federated Learning sits between the two as a "controlled decentralization" solution. It meets industry needs for privacy and compliance, provides a feasible path for cross-institutional collaboration, and is well suited as a transitional deployment architecture for industry.
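To make the "train locally, aggregate centrally" loop concrete, here is a minimal sketch of Federated Averaging (FedAvg), the canonical FL aggregation rule, applied to a toy one-variable linear model. It is not FedML code. The client datasets, learning rate, and number of rounds are illustrative assumptions. Real deployments add client sampling, secure aggregation, and update compression on top of this loop.

```python
import random


def local_sgd(w, b, data, lr=0.1, epochs=5):
    """Client side: fit y = w*x + b on private data with plain SGD."""
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def fedavg(updates):
    """Coordinator side: average (w, b, n_samples) tuples weighted by n_samples."""
    total = sum(n for _, _, n in updates)
    w = sum(wi * n for wi, _, n in updates) / total
    b = sum(bi * n for _, bi, n in updates) / total
    return w, b


def make_client(n, seed):
    """Toy private dataset: noisy samples of y = 2x + 1 (illustrative only)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.05)) for x in xs]


# Three clients with different amounts of data; their raw samples stay local.
clients = [make_client(n, seed) for seed, n in enumerate([20, 50, 80])]

w, b = 0.0, 0.0  # global model held by the coordinator
for _ in range(10):  # communication rounds
    # Each client starts from the global model and uploads only (w, b, n).
    updates = [(*local_sgd(w, b, data), len(data)) for data in clients]
    w, b = fedavg(updates)

print(f"global model: w={w:.2f}, b={b:.2f}")
```

Weighting by sample count means clients with more data pull the global model harder. The coordinator never sees a single (x, y) pair.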

As outlined in our previous reports, the overall AI Agent protocol stack can be divided into three main layers:

- Infrastructure Layer (Agent Infrastructure Layer): This layer provides the foundational runtime support for agents and is the technical cornerstone for building all Agent systems.
  - Core Modules: Includes Agent Framework (agent development and runtime framework) and Agent OS (lower-level multitasking scheduling and modular runtime), providing core capabilities for agent lifecycle management.
  - Support Modules: Such as Agent DID (decentralized identity), Agent Wallet & Abstraction (account abstraction and transaction execution), and Agent Payment/Settlement (payment and settlement capabilities).
- Coordination & Execution Layer: Focuses on collaboration, task scheduling, and incentive mechanisms among multiple agents, and is key to building "collective intelligence" in agent systems.
  - Agent Orchestration: The command mechanism for unified scheduling and management of agent lifecycles, task allocation, and execution processes, suitable for workflow scenarios with centralized control.
  - Agent Swarm: A collaborative structure emphasizing distributed agent cooperation, with high autonomy, division of labor, and resilient collaboration, suitable for complex tasks in dynamic environments.
  - Agent Incentive Layer: Builds the economic incentive system for the agent network, motivating developers, executors, and validators, and providing sustainable momentum for the agent ecosystem.
- Application & Distribution Layer:
  - Distribution Subclass: Includes Agent Launchpad, Agent Marketplace, and Agent Plugin Network.
  - Application Subclass: Covers AgentFi, Agent Native DApp, Agent-as-a-Service, etc.
  - Consumer Subclass: Mainly Agent Social / Consumer Agent, targeting lightweight consumer social scenarios.
  - Meme: Speculative use of the Agent concept, lacking actual technical implementation and application, driven only by marketing.
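The difference between Agent Orchestration and Agent Swarm can be illustrated with a toy Python sketch. The agent names, skills, and assignment rules are hypothetical and are not taken from any specific project. In the orchestrated version, one coordinator sees all agents and balances load centrally. In the swarm version, agents claim tasks from a shared board using only their own capabilities.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    skills: set
    load: int = 0  # tasks assigned so far (used by the orchestrator)


def orchestrate(tasks, agents):
    """Orchestration: one coordinator sees every agent and assigns each task."""
    plan = {}
    for task, skill in tasks:
        capable = [a for a in agents if skill in a.skills]
        if not capable:
            plan[task] = None  # no agent can take this task
            continue
        chosen = min(capable, key=lambda a: a.load)  # central load balancing
        chosen.load += 1
        plan[task] = chosen.name
    return plan


def swarm(tasks, agents):
    """Swarm: tasks sit on a shared board and each agent claims one it can do."""
    board = list(tasks)
    plan = {}
    progressed = True
    while board and progressed:
        progressed = False
        for agent in agents:  # each agent decides using only its own skills
            for i, (task, skill) in enumerate(board):
                if skill in agent.skills:
                    plan[task] = agent.name
                    board.pop(i)
                    progressed = True
                    break
    for task, _ in board:
        plan[task] = None  # left unclaimed: no capable agent
    return plan


def make_agents():
    # Hypothetical agents and skills.
    return [Agent("researcher", {"data", "llm"}), Agent("trader", {"llm", "defi"})]


tasks = [("fetch_prices", "data"), ("summarize", "llm"),
         ("draft_report", "llm"), ("rebalance", "defi")]
print("orchestrated:", orchestrate(tasks, make_agents()))
print("swarm:       ", swarm(tasks, make_agents()))
```

In this small example, both approaches arrive at the same assignment. What differs is where the decision is made. The orchestrator needs a global view of every agent's load. Swarm agents need only the shared board and their own skills, which is what makes the swarm style more resilient when agents join or leave.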

II. Federated Learning Benchmark FedML and TensorOpera Full-Stack Platform

FedML is one of the earliest open-source frameworks for Federated Learning and distributed training. It originated with an academic team at USC and was gradually commercialized as the core product of TensorOpera AI. It gives researchers and developers tools for collaborative training on data spread across institutions and devices. In academia, FedML appears frequently in work published at top conferences such as NeurIPS, ICML, and AAAI, and it has become a common experimental platform for Federated Learning research. In industry, FedML enjoys a strong reputation in privacy-sensitive scenarios such as healthcare, finance, edge AI, and Web3 AI, and it is regarded as a benchmark toolchain in the field of Federated Learning.

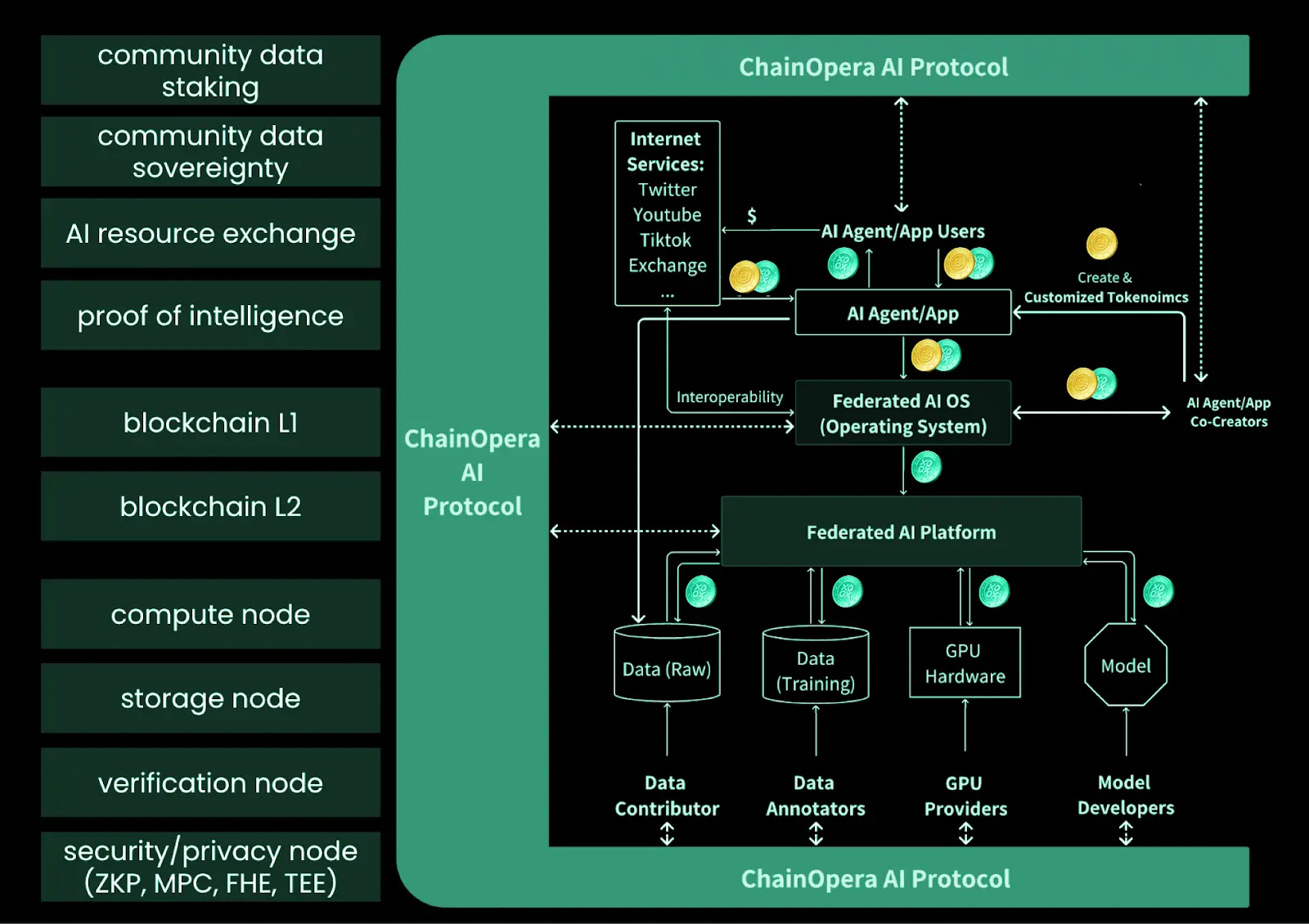

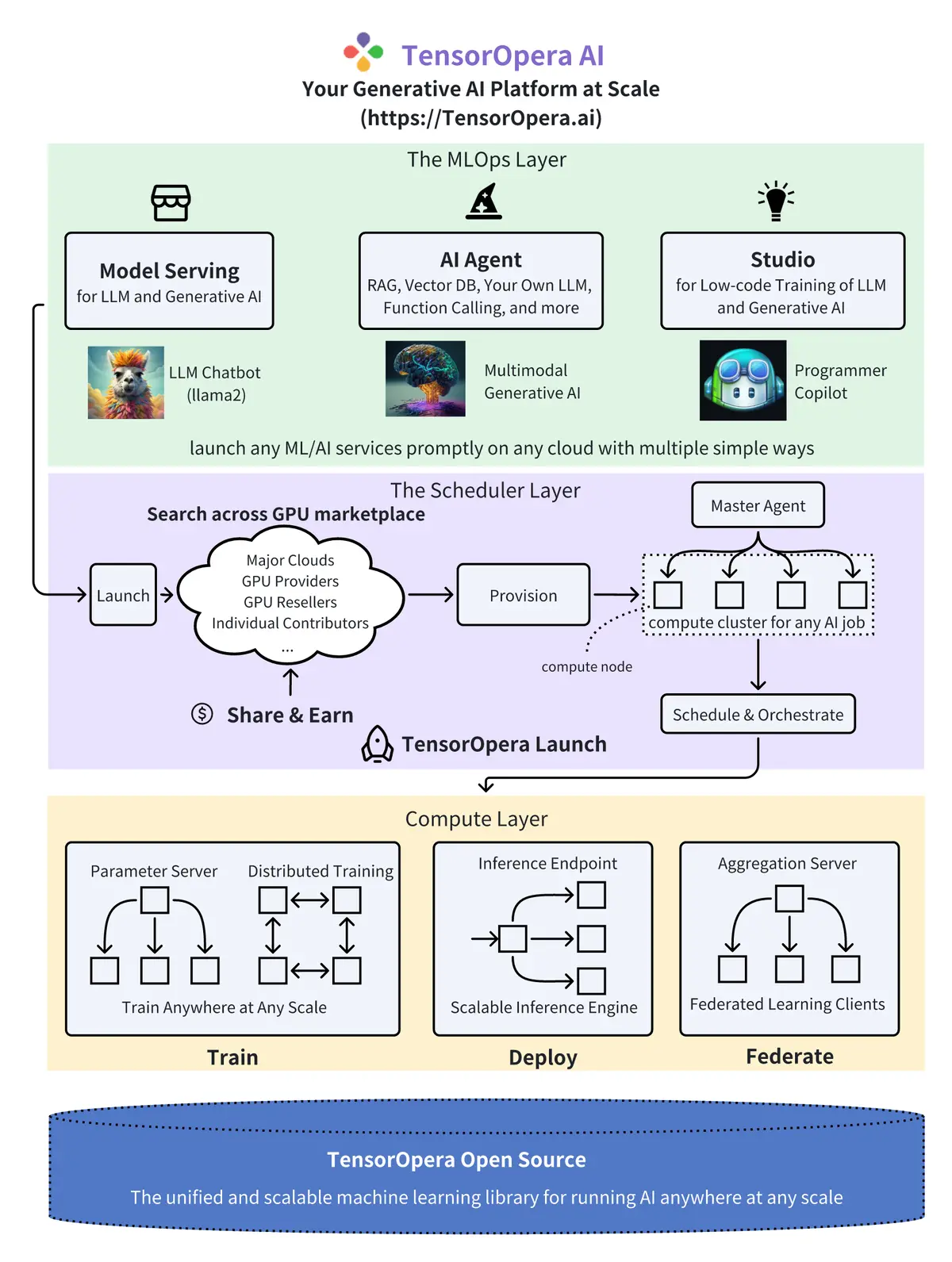

TensorOpera is the commercial upgrade of FedML, evolving into a full-stack AI infrastructure platform for enterprises and developers: while retaining Federated Learning capabilities, it expands to GPU Marketplace, model services, and MLOps, thus entering the broader market of large models and the Agent era. The overall architecture of TensorOpera can be divided into three layers: Compute Layer (infrastructure), Scheduler Layer (scheduling), and MLOps Layer (application):

1. Compute Layer (Base Layer)

The Compute layer is the technical foundation of TensorOpera, continuing the open-source DNA of FedML. Core functions include Parameter Server, Distributed Training, Inference Endpoint, and Aggregation Server. Its value lies in providing distributed training, privacy-preserving Federated Learning, and scalable inference engines, supporting the three core capabilities of "Train / Deploy / Federate", covering the complete chain from model training and deployment to cross-institution collaboration, and serving as the platform's foundational layer.

2. Scheduler Layer (Middle Layer)

The Scheduler layer acts as the hub for compute resource trading and scheduling. It consists of GPU Marketplace, Provision, Master Agent, and Schedule & Orchestrate, and it supports resource calls across public clouds, GPU providers, and independent contributors. This layer marks the key turning point in FedML's upgrade to TensorOpera. Through intelligent compute scheduling and task orchestration, it enables larger-scale AI training and inference, covering typical LLM and generative AI scenarios. In addition, the layer's Share & Earn model leaves interfaces for incentive mechanisms, with the potential to support DePIN or Web3 models.
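To illustrate what compute scheduling in such a layer involves, here is a hedged sketch of greedy job placement across a mixed pool of public-cloud, GPU-provider, and independent capacity. The provider names, prices, and the "largest job first, cheapest fit" rule are assumptions for illustration. This is not TensorOpera's actual scheduler. The per-job cost it computes is the kind of figure a Share & Earn-style payout could be based on.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    kind: str  # "public_cloud", "gpu_provider", or "independent"
    free_gpus: int
    price_per_gpu_hour: float


@dataclass
class Job:
    name: str
    gpus: int
    hours: float


def schedule(jobs, providers):
    """Greedy placement: largest jobs first, each on the cheapest provider that fits.

    Returns {job_name: (provider_name, cost)}, or None when no provider has capacity.
    """
    placements = {}
    for job in sorted(jobs, key=lambda j: j.gpus, reverse=True):
        fits = [p for p in providers if p.free_gpus >= job.gpus]
        if not fits:
            placements[job.name] = None  # would wait in a queue
            continue
        best = min(fits, key=lambda p: p.price_per_gpu_hour)
        best.free_gpus -= job.gpus  # reserve capacity
        cost = job.gpus * job.hours * best.price_per_gpu_hour
        placements[job.name] = (best.name, cost)
    return placements


# Hypothetical providers and jobs.
providers = [
    Provider("cloud-a", "public_cloud", free_gpus=64, price_per_gpu_hour=2.50),
    Provider("gpu-b", "gpu_provider", free_gpus=16, price_per_gpu_hour=1.80),
    Provider("node-c", "independent", free_gpus=4, price_per_gpu_hour=1.20),
]
jobs = [Job("llm-finetune", 32, 10), Job("inference", 4, 24), Job("eval", 8, 2)]
result = schedule(jobs, providers)
for job_name, placement in result.items():
    print(job_name, "->", placement)
```

Placing large jobs first keeps the only provider big enough for them from being fragmented by small jobs. Small jobs can then land on cheaper independent nodes. A production scheduler would also weigh latency, reliability, and data locality.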

3. MLOps Layer (Top Layer)

The MLOps layer is the service interface directly facing developers and enterprises, with modules such as Model Serving, AI Agent, and Studio. Typical applications include LLM chatbots, multimodal generative AI, and developer Copilot tools. Its value lies in abstracting the underlying compute and training capabilities into high-level APIs and products. This lowers the barrier to use and provides ready-to-use agents, low-code development environments, and scalable deployment. It is positioned against new-generation AI infrastructure platforms such as Anyscale, Together, and Modal, serving as a bridge from infrastructure to applications.

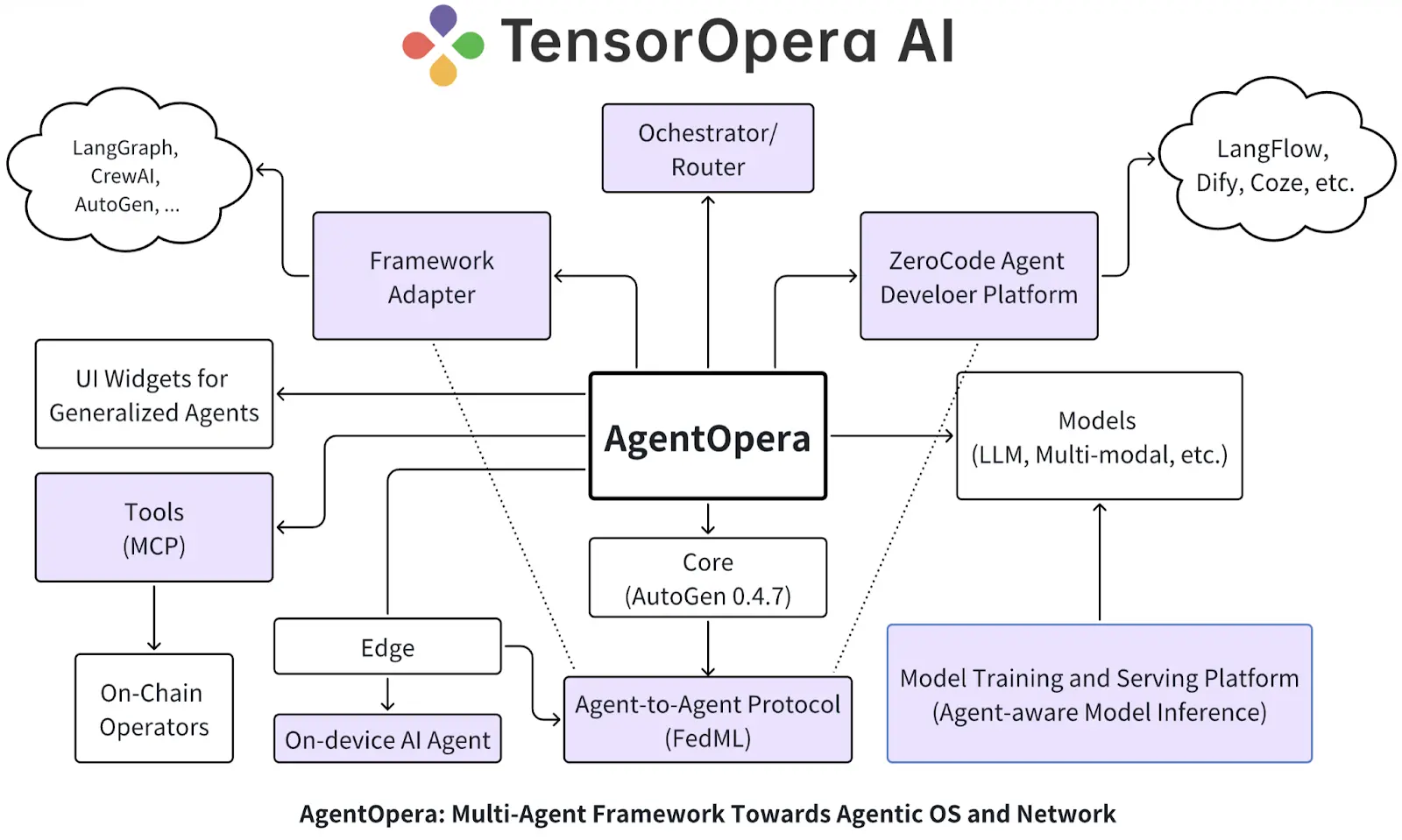

In March 2025, TensorOpera was upgraded to a full-stack platform for AI Agents, with core products covering the AgentOpera AI App, Framework, and Platform. The application layer provides a ChatGPT-like multi-agent entry point. The framework layer evolves into an "Agentic OS" with graph-structured multi-agent systems and an Orchestrator/Router. The platform layer integrates deeply with the TensorOpera model platform and FedML to deliver distributed model services, RAG optimization, and hybrid edge-cloud deployment. The overall goal is to build "one operating system, one agent network", enabling developers, enterprises, and users to jointly build a new-generation Agentic AI ecosystem in an open, privacy-protecting environment.
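A graph-structured multi-agent system with an Orchestrator/Router can be sketched as follows. This is a hypothetical illustration, not the AgentOpera API. A keyword-based router picks a workflow graph. The orchestrator then runs each agent in dependency order using Python's standard `graphlib.TopologicalSorter`, passing upstream outputs downstream. The agents here are placeholder functions. A production router would more likely use a learned classifier or an LLM call than a keyword rule.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


# Hypothetical agents: each takes the user query plus upstream outputs.
def search_agent(query, inputs):
    return f"sources for '{query}'"


def price_agent(query, inputs):
    return f"price data for '{query}'"


def analyst_agent(query, inputs):
    return f"analysis of {inputs['search']}"


def writer_agent(query, inputs):
    return "report: " + "; ".join(inputs[name] for name in sorted(inputs))


AGENTS = {"search": search_agent, "price": price_agent,
          "analyst": analyst_agent, "writer": writer_agent}

# Workflow graphs: each node maps to the set of nodes it depends on.
GRAPHS = {
    "research": {"search": set(), "analyst": {"search"}, "writer": {"analyst"}},
    "market": {"search": set(), "price": set(),
               "analyst": {"search"}, "writer": {"analyst", "price"}},
}


def route(query):
    """Router: choose a workflow graph (a simple keyword rule in this sketch)."""
    return "market" if "price" in query.lower() else "research"


def run(query):
    """Orchestrator: execute the routed graph in dependency order."""
    graph = GRAPHS[route(query)]
    outputs = {}
    for node in TopologicalSorter(graph).static_order():
        upstream = {dep: outputs[dep] for dep in graph[node]}
        outputs[node] = AGENTS[node](query, upstream)
    return outputs


print(run("ETH price outlook")["writer"])
```

Representing workflows as dependency graphs lets independent agents, such as `search` and `price` in the "market" graph, be scheduled in parallel in a fuller implementation.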

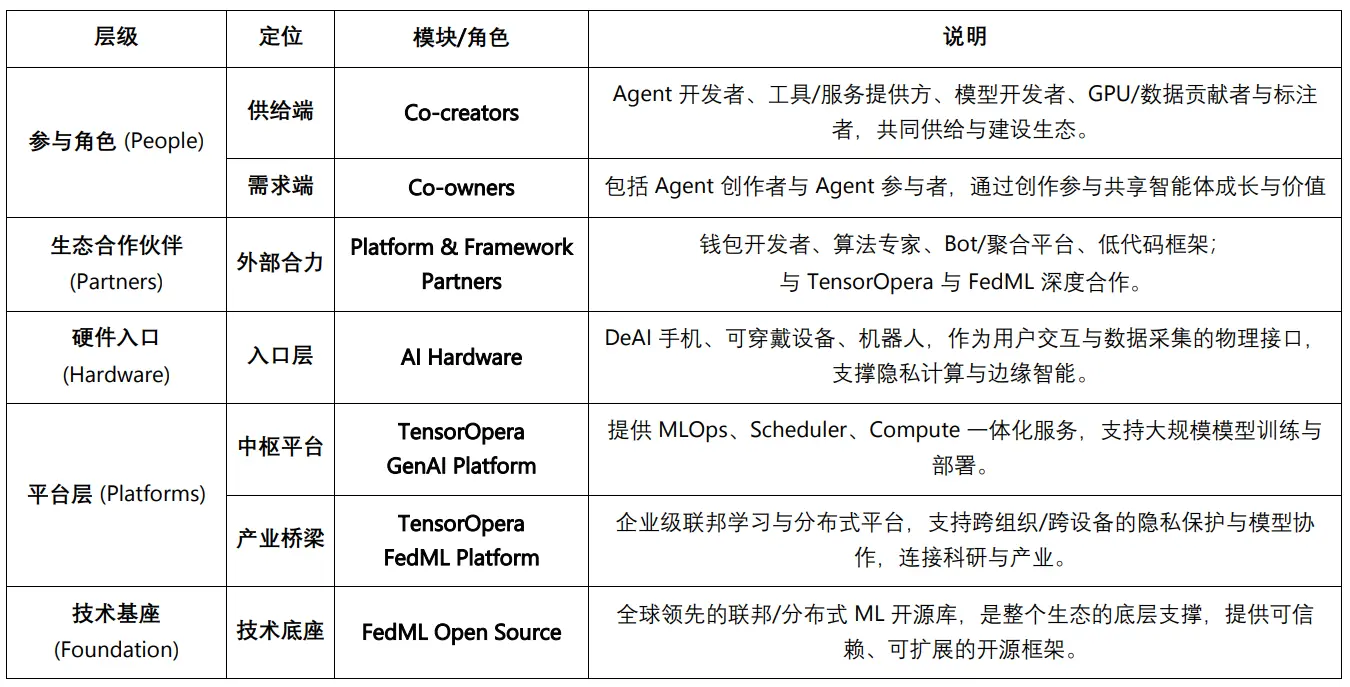

III. ChainOpera AI Ecosystem Panorama: From Co-creators and Co-owners to Technical Foundation

If FedML is the technical core, providing the open-source DNA for Federated Learning and distributed training, and TensorOpera abstracts FedML's research into commercializable full-stack AI infrastructure, then ChainOpera takes TensorOpera's capabilities "on-chain" as a platform. It builds a decentralized agent network ecosystem through AI Terminal + Agent Social Network + DePIN model and compute layer + AI-Native blockchain. The core shift is one of audience. TensorOpera still mainly targets enterprises and developers. ChainOpera, by contrast, uses Web3 governance and incentive mechanisms to bring users, developers, and GPU/data providers into co-creation and co-governance, so that AI Agents are not just "used" but "co-created and co-owned".

Co-creator Ecosystem

ChainOpera AI provides toolchains, infrastructure, and coordination layers for ecosystem co-creation through Model & GPU Platform and Agent Platform, supporting model training, agent development, deployment, and collaborative expansion.

Co-creators in the ChainOpera ecosystem include AI Agent developers (designing and operating agents), tool and service providers (templates, MCP, databases, and APIs), model developers (training and publishing model cards), GPU providers (contributing compute power via DePIN and Web2 cloud partners), and data contributors and labelers (uploading and labeling multimodal data). The three core supplies—development, compute, and data—jointly drive the continuous growth of the agent network.

Co-owner Ecosystem

The ChainOpera ecosystem also introduces a co-owner mechanism, enabling collaborative network construction through cooperation and participation. AI Agent creators are individuals or teams who design and deploy new agents via the Agent Platform, responsible for building, launching, and maintaining them, thus driving innovation in functions and applications. AI Agent participants come from the community, participating in the agent lifecycle by acquiring and holding Access Units, and supporting agent growth and activity through usage and promotion. These two roles represent the supply and demand sides, jointly forming a value-sharing and collaborative development model within the ecosystem.

Ecosystem Partners: Platforms and Frameworks

ChainOpera AI collaborates with multiple parties to enhance platform usability and security, with a focus on Web3 scenario integration:
- The AI Terminal App integrates wallets, algorithms, and aggregation platforms to provide intelligent service recommendations.
- The Agent Platform introduces diverse frameworks and no-code tools to lower the development threshold.
- The platform relies on TensorOpera AI for model training and inference.
- It has an exclusive partnership with FedML to support privacy-preserving training across institutions and devices.

Overall, the result is an open ecosystem that balances enterprise-grade applications with the Web3 user experience.

Hardware Entry: AI Hardware & Partners

Through partners such as DeAI Phone, wearables, and Robot AI, ChainOpera integrates blockchain and AI into smart terminals, enabling dApp interaction, on-device training, and privacy protection, gradually forming a decentralized AI hardware ecosystem.

Central Platform and Technical Foundation: TensorOpera GenAI & FedML

TensorOpera provides a full-stack GenAI platform covering MLOps, Scheduler, and Compute; its sub-platform FedML has grown from academic open source to an industrialized framework, strengthening AI's ability to "run anywhere, scale arbitrarily".

ChainOpera AI Ecosystem

IV. ChainOpera Core Products and Full-Stack AI Agent Infrastructure

In June 2025, ChainOpera officially launched the AI Terminal App and decentralized technology stack, positioning itself as the "decentralized version of OpenAI". Its core products cover four major modules: Application Layer (AI Terminal & Agent Network), Developer Layer (Agent Creator Center), Model & GPU Layer (Model & Compute Network), and CoAI Protocol & dedicated chain, covering the complete closed loop from user entry to underlying compute and on-chain incentives.

The AI Terminal App has integrated BNBChain, supporting on-chain transactions and DeFi scenario agents. The Agent Creator Center is open to developers, providing MCP/HUB, knowledge base, and RAG capabilities, with community agents continuously joining; it also initiated the CO-AI Alliance, linking partners such as io.net, Render, TensorOpera, FedML, and MindNetwork.
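The retrieval step behind a knowledge base with RAG can be sketched in a few lines. This is a simplified stand-in, not ChainOpera's implementation. It scores chunks with bag-of-words cosine similarity instead of learned embeddings, and it stops at building the augmented prompt, leaving out the model call. The sample chunks paraphrase this report.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lowercase word tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[t] for t, count in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query, chunks, k=2):
    """Return the k knowledge-base chunks most similar to the query."""
    q = Counter(tokens(query))
    return sorted(chunks, key=lambda c: cosine(q, Counter(tokens(c))), reverse=True)[:k]


def build_prompt(query, chunks, k=2):
    """Augment the question with retrieved context before calling a model."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "FedML is an open-source framework for federated learning and distributed training.",
    "TensorOpera adds a GPU Marketplace, model serving, and MLOps on top of FedML.",
    "The AI Terminal App has integrated BNB Chain for on-chain transactions.",
]
print(build_prompt("What does TensorOpera add to FedML?", knowledge_base, k=1))
```

Swapping `tokens` and `cosine` for an embedding model and a vector index keeps the same retrieve-then-prompt shape.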

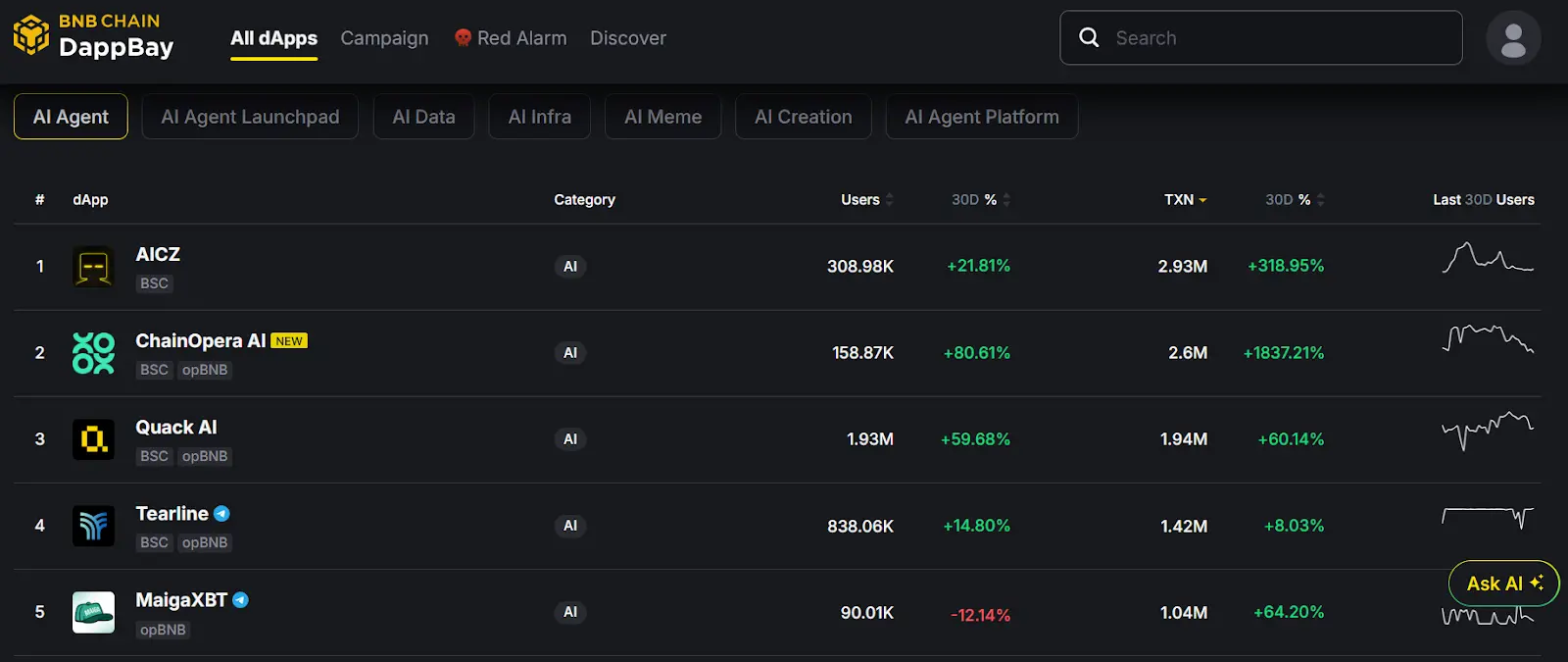

According to BNB DApp Bay on-chain data, it recorded 158.87K unique users and 2.6 million transactions over the past 30 days. This ranks it second site-wide in the BSC "AI Agent" category, demonstrating strong on-chain activity.

Super AI Agent App – AI Terminal

As a decentralized ChatGPT and AI social entry point, AI Terminal provides multimodal collaboration, data contribution incentives, DeFi tool integration, cross-platform assistants, and supports AI Agent collaboration and privacy protection (Your Data, Your Agent). Users can directly invoke open-source large models such as DeepSeek-R1 and community agents on mobile devices, with language tokens and crypto tokens transparently circulating on-chain during interactions. Its value lies in transforming users from "content consumers" to "intelligent co-creators", and enabling the use of dedicated agent networks in scenarios such as DeFi, RWA, PayFi, and e-commerce.

AI Agent Social Network

It is positioned as something like LinkedIn + Messenger, but for AI Agents. Through virtual workspaces and Agent-to-Agent collaboration mechanisms (MetaGPT, ChatDev, AutoGen, CAMEL), it promotes the evolution from single agents to multi-agent collaborative networks. Applications span finance, gaming, e-commerce, research, and more, and the network gradually enhances agent memory and autonomy.

AI Agent Developer Platform

It provides developers with a "Lego-style" creation experience. It supports no-code and modular extension. Blockchain contracts secure ownership, DePIN plus cloud infrastructure lowers the barrier to entry, and the Marketplace offers distribution and discovery channels. The core aim is to let developers reach users quickly, with their ecosystem contributions transparently recorded and rewarded.

AI Model & GPU Platform

As the infrastructure layer, it combines DePIN and Federated Learning to solve the pain point of Web3 AI's reliance on centralized compute. Through distributed GPU, privacy-preserving data training, model and data marketplaces, and end-to-end MLOps, it supports multi-agent collaboration and personalized AI. Its vision is to drive the infrastructure paradigm shift from "big tech monopoly" to "community co-construction".

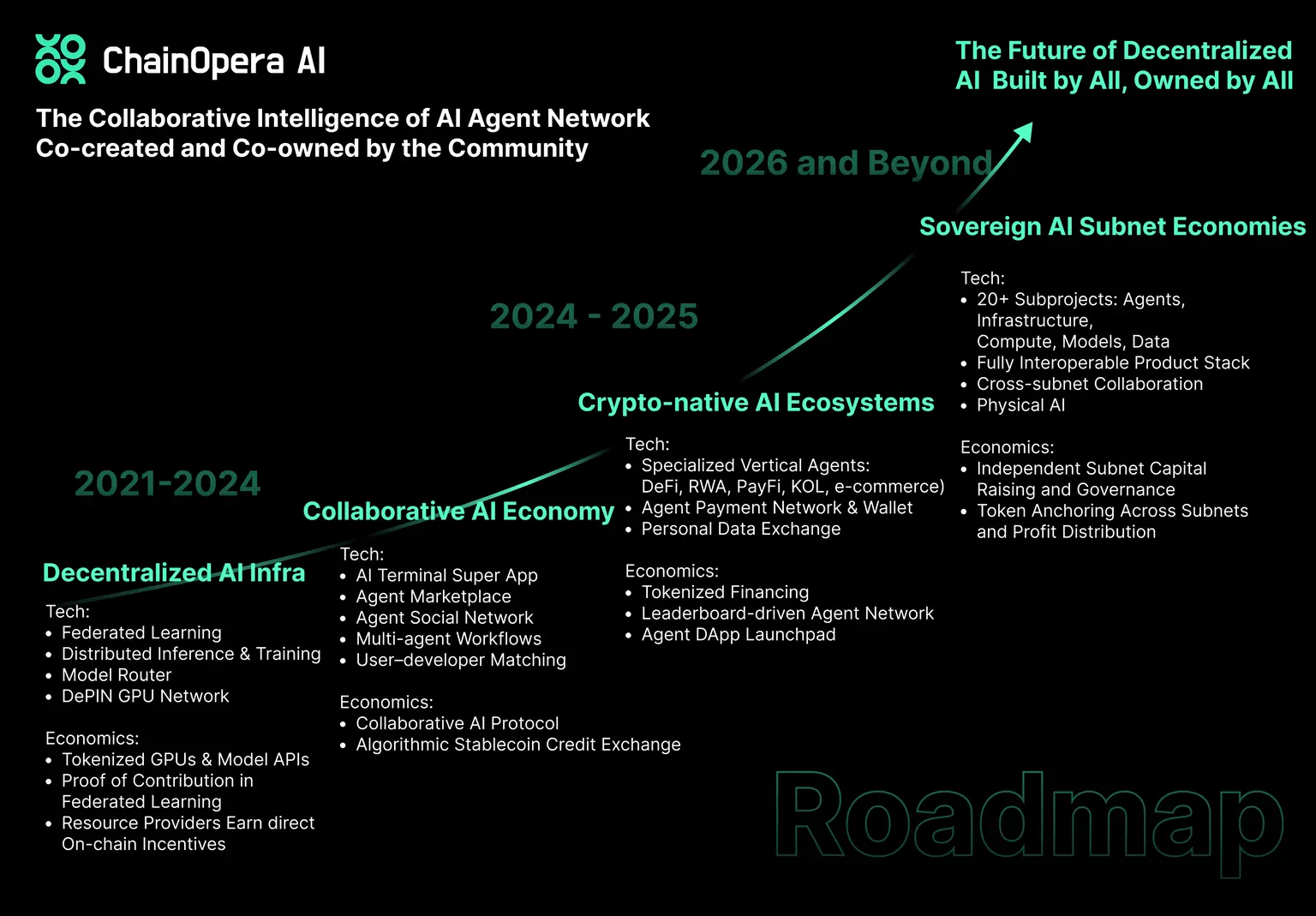

V. ChainOpera AI Roadmap

In addition to the officially launched full-stack AI Agent platform, ChainOpera AI firmly believes that Artificial General Intelligence (AGI) will come from multimodal, multi-agent collaborative networks. Therefore, its long-term roadmap is divided into four stages:

- Stage One (Compute → Capital): Build decentralized infrastructure, including a GPU DePIN network and Federated Learning and distributed training/inference platforms, and introduce a Model Router to coordinate multi-end inference. Incentive mechanisms allow compute, model, and data providers to receive usage-based rewards.
- Stage Two (Agentic Apps → Collaborative AI Economy): Launch AI Terminal, Agent Marketplace, and Agent Social Network to form a multi-agent application ecosystem. Connect users, developers, and resource providers through the CoAI Protocol, and introduce a user demand–developer matching system and a credit system to drive high-frequency interaction and sustained economic activity.
- Stage Three (Collaborative AI → Crypto-Native AI): Deploy in DeFi, RWA, payments, and e-commerce, and expand to KOL scenarios and personal data exchange. Develop dedicated LLMs for finance/crypto, and launch Agent-to-Agent payment and wallet systems to drive scenario-based "Crypto AGI" applications.
- Stage Four (Ecosystems → Autonomous AI Economies): Gradually evolve into autonomous subnet economies. Each subnet is independently governed and tokenized around applications, infrastructure, compute, models, or data, and subnets collaborate through cross-subnet protocols to form a multi-subnet ecosystem. Meanwhile, transition from Agentic AI to Physical AI (robots, autonomous driving, aerospace).
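The usage-based rewards described in Stage One can be illustrated with a simple pro-rata split. The sketch below is an assumption about how such a rule might work, not ChainOpera's published tokenomics. It divides an integer reward pool among compute, model, and data providers in proportion to hypothetical usage points. It uses the largest-remainder method so that the payouts add up exactly to the pool.

```python
def split_rewards(pool, usage):
    """Split an integer reward pool pro-rata to usage points.

    Largest-remainder rounding makes the payouts sum exactly to `pool`.
    """
    total = sum(usage.values())
    if total == 0:
        return {name: 0 for name in usage}
    payouts = {name: pool * pts // total for name, pts in usage.items()}
    leftover = pool - sum(payouts.values())
    # Give the leftover units to the providers with the largest remainders.
    by_remainder = sorted(usage, key=lambda n: (pool * usage[n]) % total, reverse=True)
    for name in by_remainder[:leftover]:
        payouts[name] += 1
    return payouts


# Hypothetical metered usage for one epoch, in common "usage points".
usage = {"gpu:node-a": 5, "model:router-llm": 3, "data:defi-corpus": 1}
print(split_rewards(100, usage))
```

Working in integer token units avoids floating-point drift. That matters when payouts are settled on-chain and must reconcile exactly with the pool.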

