Meta temporarily adjusts AI chatbot policies for teenagers

Meta said on Friday (local time) that it is temporarily adjusting its AI chatbot policies for teenage users in response to lawmakers' concerns about safety and inappropriate conversations.

A Meta spokesperson confirmed that the social media giant is currently training its AI chatbot not to respond to teenagers on topics such as self-harm, suicide, or eating disorders, and to avoid potentially inappropriate emotional conversations.

Meta said that, where appropriate, the AI chatbot will instead direct teenagers to professional help resources.

In a statement, Meta said: "As our user base grows and our technology evolves, we continue to study how teenagers interact with these tools and strengthen our safeguards accordingly."

In addition, teenage users of Meta apps such as Facebook and Instagram will in future be able to access only a select set of AI chatbots, which are designed mainly to provide educational support and skill development.


You may also like

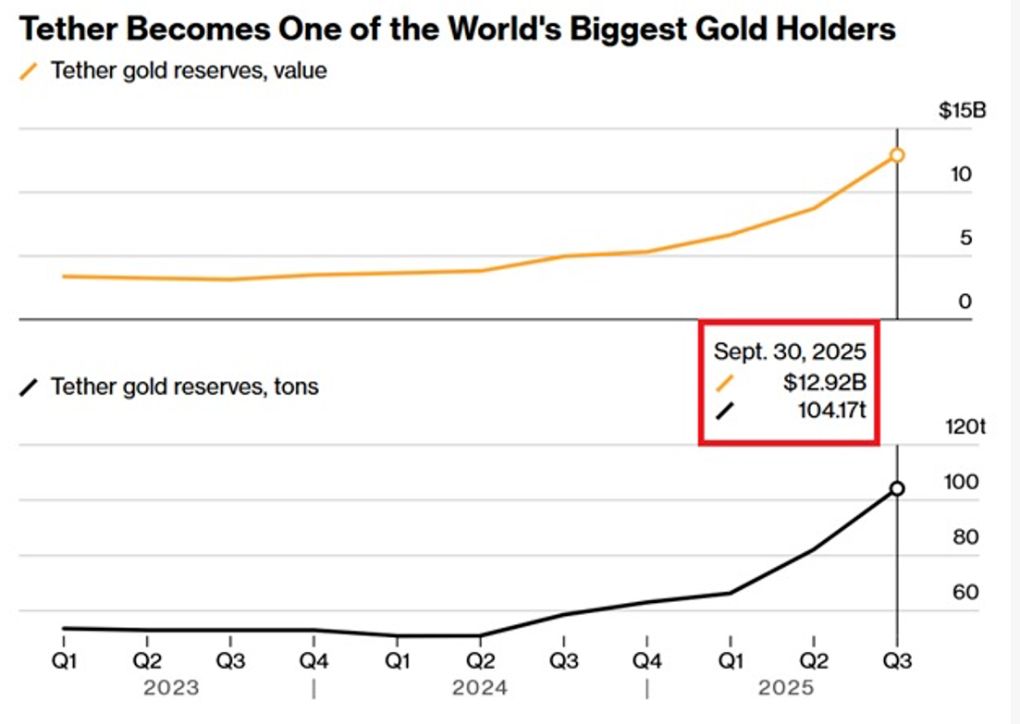

Digital dollar hoards gold, Tether's vault is astonishing!

The Crypto Bloodbath Stalls: Is a Bottom In?

Can the 40 billion bitcoin taken away by Qian Zhimin be returned to China?

Our core demand is very clear—to return the assets to their rightful owners, that is, to return them to the Chinese victims.