Zeno's Digital Twin Ideal and the Popularization of Technology in DeSci

Carbon-based intelligence and silicon-based intelligence coexist under the same roof.

Author: Eric, Foresight News

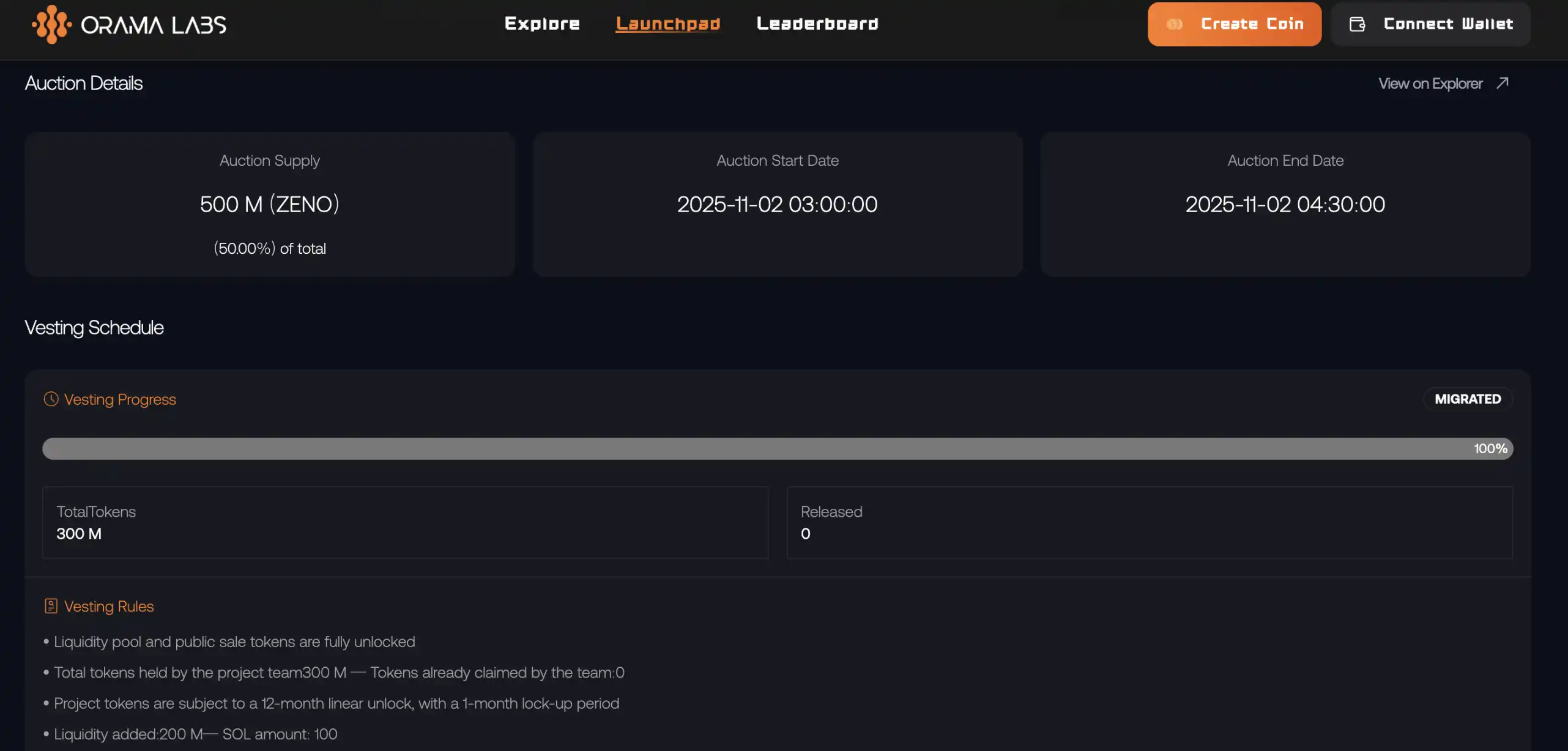

Just over a week ago, the DeSci platform Orama Labs completed the token launch for Zeno, the first project on its launchpad, OramaPad. Zeno allocated 500 million ZENO tokens to the launchpad, half of the total supply. OramaPad required users to stake its token, PYTHIA, to participate, and this "opening show" attracted $3.6 million worth of staked PYTHIA.

Orama Labs aims to address the inefficient allocation of funding and resources in traditional scientific research. Its solution is to build a pathway from research to commercialization by funding scientific experiments, enabling intellectual property verification, breaking down data silos, and implementing community governance.

The first project on OramaPad adopted the Crown model, which requires a project to have sound business logic and/or strong technical development capabilities in the Web2 field, along with a highly practical product. Orama refers to this as OCM (Onboarding Community Market). Unlike simple meme launches, Orama essentially offers a replicable path onto the blockchain for Web2 enterprises or teams with mature business models and technical capabilities. That makes Zeno, as the first to take the plunge, especially significant.

Hardcore Technology Beyond Comprehension

Zeno is an extremely ambitious project—so much so that if you only read Zeno's documentation, you might not fully understand what the team is actually trying to accomplish. It was only after communicating with the team that I grasped the full picture of this cyberpunk-style story:

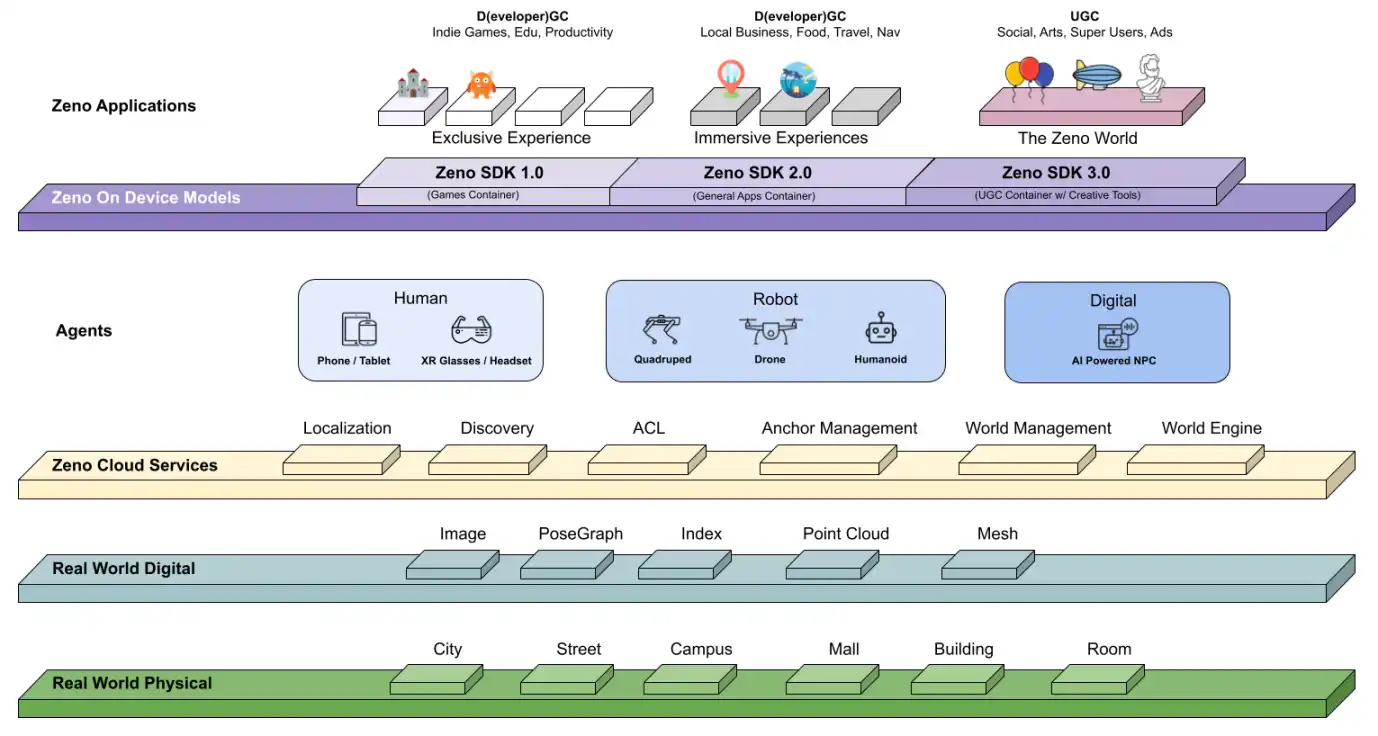

In short, Zeno aims to overlay multi-layered virtual spaces for AI and robots onto the physical spaces where humans live, so that all "intelligent agents," including humans, can coexist in the same space.

Imagine this scenario: one afternoon in the future, you're relaxing on a lounge chair on your balcony. At home, you have an AI butler connected to all your furniture and appliances, and a humanoid robot busy with household chores. Suddenly, you feel a bit bored and want to play a virtual game of catch with your two "brothers" at home. So you put on your VR/AR glasses, and in this virtual world the robot appears human, and the AI that exists only online also takes on a human form. The robot sits on the sofa, the AI sits on the floor, and the three of you pass a virtual basketball around while discussing what to eat for dinner.

This is Zeno's ultimate vision: enabling carbon-based intelligent beings and silicon-based intelligent agents to live together in the same physical space.

Many of us imagine cyberspace as a purely virtual realm, like the new world entered via VR in the movie "Ready Player One." Even our current interactions with AI are through flat interfaces like computer or phone screens. Zeno, however, hopes to bring these virtual spaces directly into real life, creating a "superimposed state" where the physical and digital worlds coexist in the same time and space. This would make digital content as "tangible" as physical objects, allowing humans, robots, and AI to interact naturally in real scenarios and build a mixed reality ecosystem where the virtual and real are in sync and humans and machines coexist.

Of course, the world we see may not be exactly the same as what robots and AI see. For example, you might not want the robot to wander into your study, so you can "lock" the study door in the robot's virtual world. Only after you "unlock" it does the robot have permission to enter the study.
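The "locked study" idea can be pictured as a per-agent permission on a region of space. The following is only a minimal sketch under assumed names (`Zone`, `locked_for`, and the agent ids are hypothetical and do not come from Zeno's documentation); it shows how the same room might be open to one agent and closed to another.

```python
from dataclasses import dataclass, field


@dataclass
class Zone:
    """A named region of the home, such as a room (hypothetical model)."""
    name: str
    # Ids of agents that are currently denied entry to this zone.
    locked_for: set = field(default_factory=set)

    def lock(self, agent_id: str) -> None:
        """Deny the given agent entry to this zone."""
        self.locked_for.add(agent_id)

    def unlock(self, agent_id: str) -> None:
        """Restore the given agent's permission to enter."""
        self.locked_for.discard(agent_id)

    def can_enter(self, agent_id: str) -> bool:
        return agent_id not in self.locked_for


study = Zone("study")
study.lock("robot")

robot_blocked = study.can_enter("robot")   # False: the door is "locked" for the robot
human_allowed = study.can_enter("human")   # True: the human's view is unaffected

study.unlock("robot")
robot_after_unlock = study.can_enter("robot")  # True again after unlocking
```

The design choice here is that the lock lives in the virtual layer attached to the room, not in the physical door, so each agent can be given a different view of the same space.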

Centered on Spatial Anchors

Living under the same roof as artificial intelligence sounds very futuristic, but there is a major prerequisite: the real world must first be modeled in the virtual world, because only that model can be programmed.

This requires you to first have real-world scene data, which is a problem many companies, including autonomous driving tech firms, are researching. Take autonomous driving as an example: if you have real-world map data for an entire city, the AI doesn't need to drive around the streets to learn how to handle different situations—it can simulate road scenarios in the lab and continuously evolve.

Although the above isn't exactly "spatial overlap," it's still an important application of building real-world models. Zeno's ultimate vision can't be achieved in one step; the first thing it needs to do is collect real-world scene data.

Zeno has already launched a program that allows users to help record spatial data using their everyday devices, supporting both robots and glasses. As for mobile phones, the team says Google's ARCore is mature enough and doesn't require further development—users can simply use compatible models. The algorithms for constructing space from the collected data are independently developed by the Zeno team.

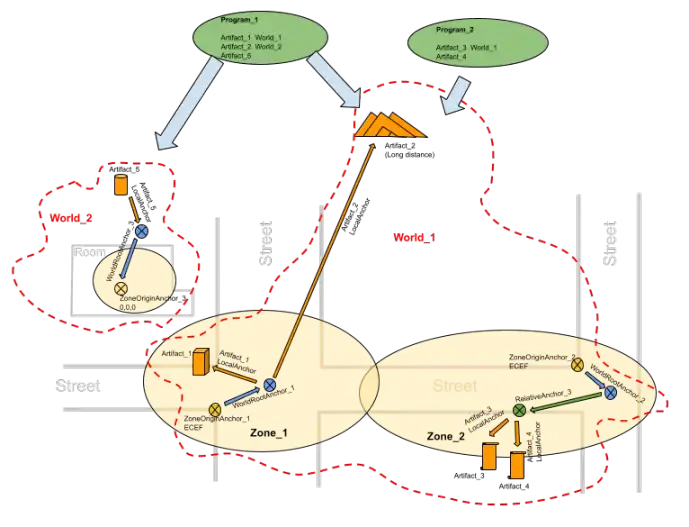

The core of building a world where the real and virtual coexist revolves around spatial anchors. From a technical perspective, the real world can't be programmed directly; its connection to the virtual world is established by associating real-world anchors and mapping out virtual spaces based on physical locations. To use an analogy: for robots and AI, the visible world is like an ocean at night, and these anchors are like lighthouses, illuminating each area for silicon-based intelligence.
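To make the anchor idea concrete, here is a minimal sketch, not Zeno's implementation. It assumes an anchor is a known position plus a heading in a shared world frame, and that virtual content is stored relative to the anchor; the class name `SpatialAnchor`, its fields, and the sample coordinates are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SpatialAnchor:
    """A fixed reference point in the physical world (illustrative only)."""
    anchor_id: str
    x: float    # world position, meters
    y: float
    z: float
    yaw: float  # rotation about the vertical axis, radians

    def to_world(self, local):
        """Map a point given relative to the anchor into world coordinates."""
        lx, ly, lz = local
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Rotate around the vertical axis, then translate by the anchor position.
        return (self.x + c * lx - s * ly,
                self.y + s * lx + c * ly,
                self.z + lz)


# An anchor on the living-room wall, rotated 90 degrees from the world frame.
wall = SpatialAnchor("living-room-wall", x=2.0, y=3.0, z=0.0, yaw=math.pi / 2)

# A virtual basketball placed 1 m "in front of" the anchor and 1 m up.
ball_world = wall.to_world((1.0, 0.0, 1.0))
print(ball_world)  # approximately (2.0, 4.0, 1.0)
```

Because the content is stored relative to the anchor, any device that can recognize the anchor can place the same virtual object in the same physical spot.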

The first step toward Zeno's "ultimate goal" is to build a full-stack platform. Beyond everyday electronic devices such as phones, it also supports data collection with professional equipment such as LiDAR, 360-degree panoramic cameras, and the RGB cameras on mobile devices or XR headsets. The team says the Zeno platform will have a powerful cloud-based visual world model and computing system that can process gigabytes of raw sensor data per day for large areas (city or global scale) and index it for rapid spatial queries. It can also process small areas (room or anchor scale) in parallel for high-throughput, real-time processing.
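The article does not say how Zeno indexes its data. As a generic illustration of what "indexing for rapid spatial queries" can mean, the sketch below uses a uniform grid (a spatial hash), one of the simplest such structures. The class `GridIndex`, the cell size, and the sample points are assumptions made for this example.

```python
import math
from collections import defaultdict


class GridIndex:
    """Uniform-grid spatial hash over 2D points (illustrative only)."""

    def __init__(self, cell_size: float) -> None:
        self.cell_size = cell_size
        self.cells = defaultdict(list)  # (cell_x, cell_y) -> [(id, x, y), ...]

    def _cell(self, x: float, y: float):
        return (math.floor(x / self.cell_size), math.floor(y / self.cell_size))

    def insert(self, item_id: str, x: float, y: float) -> None:
        self.cells[self._cell(x, y)].append((item_id, x, y))

    def query_radius(self, x: float, y: float, radius: float):
        """Return ids within `radius` of (x, y), scanning only nearby cells."""
        cx_min, cy_min = self._cell(x - radius, y - radius)
        cx_max, cy_max = self._cell(x + radius, y + radius)
        hits = []
        for cx in range(cx_min, cx_max + 1):
            for cy in range(cy_min, cy_max + 1):
                # .get avoids creating empty cells while querying.
                for item_id, px, py in self.cells.get((cx, cy), ()):
                    if math.hypot(px - x, py - y) <= radius:
                        hits.append(item_id)
        return hits


index = GridIndex(cell_size=10.0)
index.insert("cafe", 3.0, 4.0)
index.insert("kiosk", 8.0, 9.0)
index.insert("park", 25.0, 40.0)

nearby = index.query_radius(0.0, 0.0, radius=6.0)
print(nearby)  # ['cafe']
```

A query touches only the grid cells that overlap the search area, so its cost depends on local density and not on the total number of stored points. Production systems typically use more elaborate structures, but the principle is the same.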

The team also says the system is self-learning, continuously optimizing itself with high-quality data and third-party data. In the future, it will support hundreds of spatial queries per second and provide precise six-degrees-of-freedom (6-DOF) positioning, shared spatial anchor creation, rapid 3D visual reconstruction, real-time semantic segmentation, and other scene-understanding functions. It is highly scalable and can be applied widely in AR games, navigation, advertising, productivity tools, and more.

Verified spatial data, and the spatial intelligence infrastructure layer built on it, can be called by various decentralized applications: for autonomous driving route planning, end-to-end model training data for robots, generation of verifiable, auto-executing smart contracts, and spatial advertising distribution. Ultimately, this enables spatial data-driven decision-making and upper-layer applications.

Who is Behind Zeno?

Compared to some Web3 projects with vague visions, Zeno's goals, though complex, are very concrete. The reason the technical implementation is described in such detail in the project documentation is that the team members have been deeply involved in this field for years.

Zeno's team members all come from DeepMirror, also known as Chenjing Technology. If you're not familiar with Chenjing Technology, you may have heard of Pony.ai, which is listed on Nasdaq with a market cap of $7 billion. Chenjing Technology's CEO, Harry Hu, is the former COO/CFO of Pony.ai.

Zeno's CEO, Yizi Wu, was one of the early members of Google X and participated in the development of Google Glass, Google ARCore, Google Lens, and the Google Developer Platform. At Chenjing Technology, he led the overall AI architecture and World Model development.

Zeno's core team also includes Taoran Chen, a former research scientist at Chenjing Technology with dual PhDs in mathematics from MIT and Cornell, and Kevin Chen, former CFO of Chenjing Technology and former executive at Fosun Group, JPMorgan, and Morgan Stanley.

For the Zeno team, entering Web3 is more like a bold experiment by a technically oriented Web2 team. The team says that the ZENO token will be used to incentivize users who provide spatial data, as well as teams or individuals who develop infrastructure tools, applications, or games using Zeno. In addition to the 500 million tokens distributed via the launchpad, the team retains 300 million, and the remaining 200 million, together with the 100 SOL obtained from the launchpad event, will be used to add liquidity to the trading pair on Meteora.
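The allocation figures can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated in this article; the total supply of 1 billion is inferred from the statement that the 500 million launchpad tokens are half of the supply, and the variable names are illustrative.

```python
# Figures as reported in the article, in ZENO tokens.
allocations = {
    "launchpad": 500_000_000,
    "team": 300_000_000,
    "liquidity": 200_000_000,
}

# Inferred: the launchpad share is described as half of the total supply.
total_supply = allocations["launchpad"] * 2

# The three buckets should account for the whole supply.
assert sum(allocations.values()) == total_supply

shares = {name: amount / total_supply for name, amount in allocations.items()}
for name, share in shares.items():
    print(f"{name}: {share:.0%}")
# launchpad: 50%
# team: 30%
# liquidity: 20%
```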

RealityGuard, a spatial application combining AR and gaming, developed by Chenjing Technology

When asked why they chose Web3 as their base, Zeno told me that spatial data is inherently a highly decentralized digital asset, naturally suited to the Web3 environment. The spatial data collected by Zeno will also be assetized in the future and traded using the ZENO token as currency, expanding ZENO's circulation within the ecosystem. The buyers will naturally be tech companies in need of spatial data. As for more application scenarios for ZENO, "they will be further explored as the project progresses."

Through Zeno, the role of a DeSci platform has become tangible. Science doesn't have to be an obscure, purely theoretical discipline. Just as Xiaomi set out to make technology accessible to everyone, lowering the barrier to investing in technological value is one of the important things DeSci has to offer.

